This week Jellyfish released their 2025 State of Engineering Management report. Having been a Jellyfish customer for many years, I always look forward to this annual report, similar to how I anticipate the DORA report every year. It’s consistently a treasure trove of industry insights that keep me informed on key strategic trends. This year’s edition did not disappoint, with a wide range of insights drawn from a survey of 600+ software professionals worldwide. And unsurprisingly, the lion’s share of analysis looked at the impact of AI in software engineering.

AI is absolutely dominating the discussion in the software industry, so insights on topics like adoption, use cases, tools, and impact are welcome lighthouses in the storm. And we see the dominant focus on AI in other industry reports such as the DORA 2024 State of DevOps Report and the 2025 LeadDev Engineering Leadership Report, not to mention numerous engineering leadership blogs and publications that have largely turned their attention to AI.

But the AI revolution can honestly also be a bit overwhelming for Engineering Leaders at times. None of the rest of our job has gone away. We still have all the ongoing concerns of ensuring great hiring practices, making sure the team has solid ways of working, implementing solid technical practices, etc., as well as transformational topics such as adopting best practices from domains like DevOps, Platform Engineering, Data Engineering, and so on. None of that went away. We’re still leading and managing a large complex system.

But AI really does seem incredibly promising and transformational to the art of creating software. And practically speaking, it has the main spotlight, if not the entire stage, in the minds of our critical stakeholders such as CEOs, Boards of Directors, and fellow executives. They’re hearing outsized stories of the impact of AI. And they’re hearing about organizations setting strong AI mandates. Most of us are genuinely enthusiastic about dedicating a bunch of attention to AI, but practically speaking it’s not as if it’s really discretionary at this point anyway. Our stakeholders demand engagement and results.

Meanwhile, there’s a clear and strong current of concern among IC engineers. They fear that the organization will set irrational expectations around output due to AI, and they’ll be on the hook if those irrational goals can’t be achieved. After all, they’re the ones most directly experiencing the limitations of current tools. It’s one thing to vibe code a greenfield hobby project, but a completely different matter to make a high quality and stylistically appropriate change in a large, complex, legacy code base with imperfect documentation. And on top of it all, like all of us, they lack clarity in terms of what this will all mean for our profession and their jobs! So one can understand if many ICs are not leading the charge on AI zealotry.

What Can Industry Surveys Tell Us?

My question is, can industry analysis like the Jellyfish State of Engineering Management report help us figure out where this topic is going? Do we need to gently tap the brakes on the very bullish takes from our stakeholders? Encourage a softening of the bear takes from engineers on our team? Or maybe something in between?

Looking at prior reports, we don’t find clear consensus on this question. The LeadDev Engineering Leadership Report paints a moderate to bear picture of AI in engineering. They highlight the result that 60% of their respondents do not believe AI has significantly boosted (i.e., >10%) productivity on their teams. And they show a majority or near-majority of respondents perceive a variety of concerns around AI assisted software development including quality, growth opportunities for junior developers, security, and maintainability. Overall, it feels like a relatively bearish take.

The DORA report is more mixed, with some bullish results and some less positive data points. On the one hand, DORA’s survey found that respondents on average perceive a 2.1% increase in productivity per developer for every 25% increase in AI adoption. Similarly, respondents report a 2.6% increase in flow and a 2.2% increase in job satisfaction for each 25% increase in AI usage. These results may sound a bit modest at first, but when you consider how strong adoption of AI tools has been, it’s likely that typical increases in adoption are well above 25%, and reaching large fractions of many teams.

But then the DORA report also has more concerning findings. For example, they find that time doing valuable work actually decreases by 2.6%, and delivery throughput decreases by 1.5% for each 25% increase in AI usage. It’s of course hard to really know why these negative impacts occur. For example, one possible cause cited was the possibility that AI is causing people to slip into working in larger batch size changes, i.e., speedup on the coding part of the work may be slowing down other parts. It’s hard to know what trend this will follow over time, especially since tools and use cases across the SDLC are evolving, but we cannot escape the fact that DORA provides a mixed signal on the promise of AI in software engineering, at least for now.
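To make the "per 25% increase" framing concrete, here is a minimal back-of-the-envelope sketch. It takes the five DORA figures quoted above and scales them linearly with the size of the adoption increase. The linear scaling is my own simplifying assumption, not something DORA claims, and the names `DORA_EFFECT_PER_25_POINTS` and `extrapolate` are hypothetical, chosen just for this illustration.

```python
from typing import Dict

# Percent change per 25-point increase in AI adoption, as quoted from the
# DORA report in the paragraphs above.
DORA_EFFECT_PER_25_POINTS: Dict[str, float] = {
    "productivity": 2.1,
    "flow": 2.6,
    "job_satisfaction": 2.2,
    "time_doing_valuable_work": -2.6,
    "delivery_throughput": -1.5,
}


def extrapolate(adoption_increase_points: float) -> Dict[str, float]:
    """Scale each quoted effect to a given adoption increase.

    ASSUMPTION: effects scale linearly with adoption. This is an
    illustrative simplification, not a finding from the report.
    """
    steps = adoption_increase_points / 25.0
    return {
        metric: round(effect * steps, 2)
        for metric, effect in DORA_EFFECT_PER_25_POINTS.items()
    }


if __name__ == "__main__":
    # Compare a few hypothetical adoption increases side by side.
    for points in (25, 50, 75):
        print(points, extrapolate(points))
```

Under that assumption, the positive and negative effects grow together: a larger jump in adoption implies a bigger perceived productivity gain, but also a bigger dip in delivery throughput. That is one way to see why the DORA signal reads as mixed rather than simply modest.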

The Bull Take

Returning to the Jellyfish report, it’s safe to say that these results are the most bullish of the group of studies discussed here. Similar to other reports, Jellyfish found AI adoption has been exploding and is approaching universal, with more than 90% of teams reporting active use of AI in the development process.

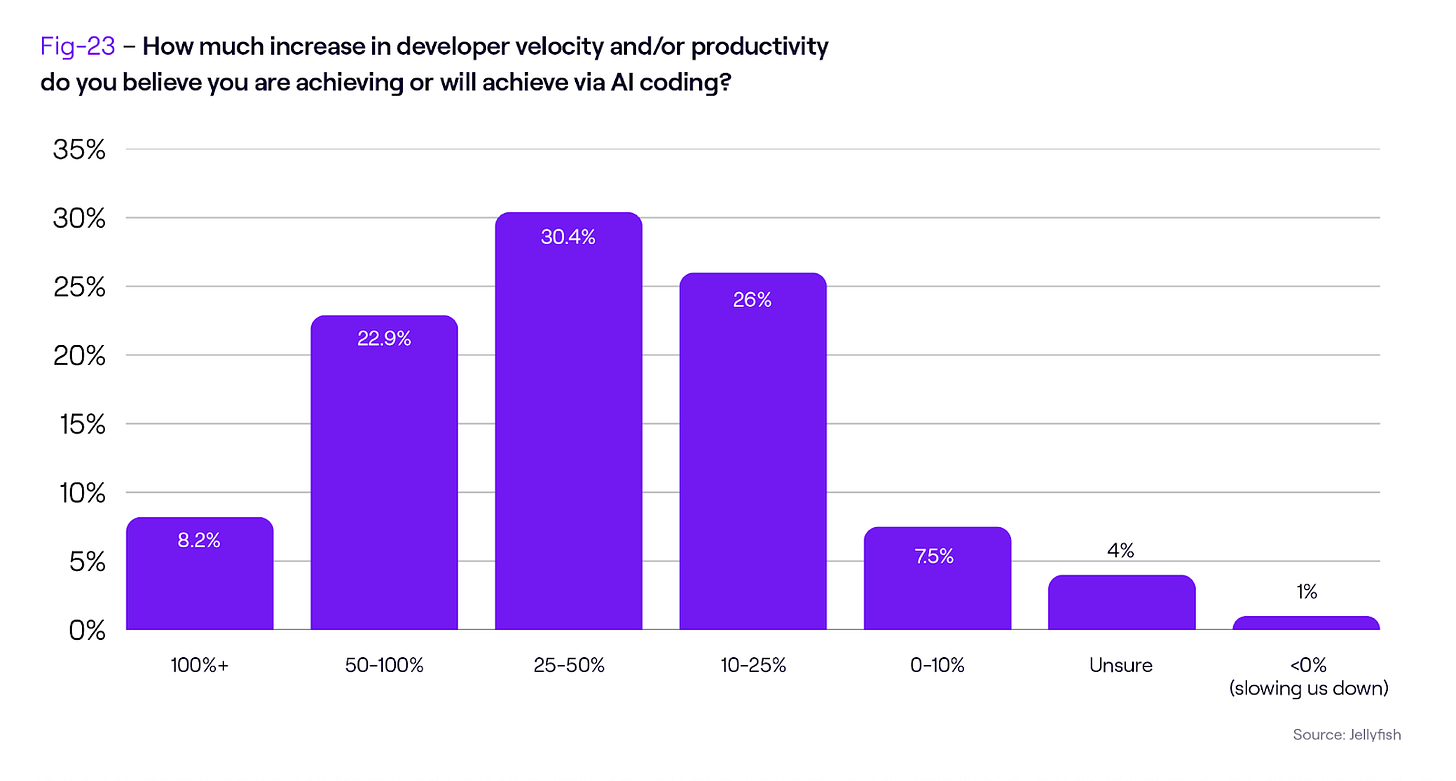

More striking than adoption, a full 67% of the Jellyfish respondents believe that they are achieving 25% or more productivity improvements in their orgs due to AI. This is a pretty exciting result, especially when you consider that it averages across orgs of varying AI usage maturity, as well as code bases that vary in how effective we can expect AI to be. But it’s also not surprising when you look at this survey data in relation to data we can see in Engineering systems. For example, Jellyfish recently looked at the impact of AI on git flow metrics and found insights such as high AI use speeding up PR cycle time by 16% on average, and having no measurable impact on quality. So the fact that people are feeling more productive isn’t an illusion – it bears out in the hard systems data.

So this leaves us with a puzzle. Which assessment should we believe – the bull case, the bear case, or the mixed view?

It’s hard to be sure at this point, especially since the data in various reports looks at different populations, with different survey designs, at different points in time, etc. Without knowing for sure, I tend to look at the trend lines for clues. For one thing, the adoption trend is striking and consistent in all of the reports. Some engineers may be skeptical about what gains are possible, but they’re certainly using the tools more, and not all of this is because of organizational mandates. The newer options for tools and the improvements in underlying models’ coding capabilities are certainly making the tools more appealing. Engineering teams are sensing some kind of compelling potential around AI coding, otherwise adoption wouldn’t be exploding.

I also like to look at related attitudes, such as what AI will mean for organizational growth.

Beyond the SDLC: A Hybrid Approach

Some of the less sexy but equally interesting results in the Jellyfish report give insights into perspectives on growth and hiring. The tech job market has been in a downturn for some time, and narratives around AI killing jobs are only adding to concerns among tech professionals. But if you look at the Jellyfish data, it tells an interesting story.

For one thing, the data exposes a positive view around growth. 61% of respondents reported their engineering budget increased as a percentage of company revenue from 2024 to 2025, compared to 19% who said it remained the same and 17% who saw a decrease. I personally found that result surprising in the current environment.

And interestingly, while there is clearly some indication of budget for AI investments coming at the expense of hiring (23% of respondents), there’s far greater indication that AI investments will come from net new spend (38%) or from trade-offs against other software spending (53%). And meanwhile, a healthy 48% of respondents see their budget for engineering systems increasing.

Returning to views on human staffing, we see correspondingly positive signals. For example, the Jellyfish survey looked at continued investment in offshoring, and 42% reported anticipating expanding offshoring efforts. This isn’t the type of investment that you prioritize if you’re envisioning aggressively replacing people with AI. Engineering leaders are still signaling importance around growth of human engineering talent.

There’s no question the consensus view holds that a significant portion of the work of creating software will be shifted to AI. 81% of the Jellyfish respondents believe that at least 25% of the work humans are doing today will be handled by AI five years from now. But looking at other signals, it seems likely that this view is in light of the whole pie getting larger. Organizations will be able to take on more – creating more value per capita and per dollar invested – with plenty of critical work for the human engineers operating in a hybrid mode. AI won’t replace humans, but augment them. And the work will transform accordingly.

Netting It Out

Looking at survey data like the Jellyfish report always feels like a bit of a Rorschach test to me. The data rarely draw definitive conclusions about what the future holds. But they give us signals, which we have to interpret as objectively as we can.

Personally I see strong signals for a bullish take on the promise of AI in software engineering in the Jellyfish data. And these track well with personal experiences and the stories I’m hearing from peers in the industry. It’s not all perfect, it’s not all complete, and there’s still lots to be figured out, but there are just too many exciting and positive stories to ignore. The bull take seems sounder to me every day.

That said, it’s critical that we continue to maintain rationality and attend to IC concerns about the reasonableness with which we push this new technology into our organizations. Practically every day you hear about new adoption mandates being put into effect like those at Shopify, Intercom, or Duolingo. I believe we should steer clear of heavy handed mandates and arbitrarily set goals. Instead we should encourage adoption, remove barriers to experimentation, and invest in organizational enablement. We should be holding AI hackathons. Nominating AI Champions from among our best developers. The new tools are exciting and will let us do amazing things. Let’s help our organizations find their way to better and better execution with AI. It will turn out more productive and more enjoyable for everyone involved!
